# Make sure the ValidMind Library is installed

%pip install -q validmind

# Load your model identifier credentials from an `.env` file

%load_ext dotenv

%dotenv .env

# Or replace with your code snippet

import validmind as vm

vm.init(

# api_host="...",

# api_key="...",

# api_secret="...",

# model="...",

)

ValidMind for model validation 2 — Start the model validation process

Learn how to use ValidMind for your end-to-end model validation process with our series of four introductory notebooks. In this second notebook, independently verify the data quality tests performed on the dataset used to train the champion model.

You'll learn how to run relevant validation tests with ValidMind, log the results of those tests to the ValidMind Platform, and insert your logged test results as evidence into your validation report. You'll become familiar with the tests available in ValidMind, as well as how to run them. Running tests during model validation is crucial to the effective challenge process, as we want to independently evaluate the evidence and assessments provided by the model development team.

While running our tests in this notebook, we'll focus on:

- Ensuring that data used for training and testing the model is of appropriate data quality

- Ensuring that the raw data has been preprocessed appropriately and that the resulting final datasets reflect this

For a full list of out-of-the-box tests, refer to our Test descriptions or try the interactive Test sandbox.

Our course tailor-made for validators new to ValidMind combines this series of notebooks with a more in-depth introduction to the ValidMind Platform: Validator Fundamentals

Prerequisites

In order to independently assess the quality of your datasets with this notebook, you'll first need to have:

Refer to the first notebook in this series: 1 — Set up the ValidMind Library for validation

Setting up

Initialize the ValidMind Library

First, let's connect up the ValidMind Library to our model we previously registered in the ValidMind Platform:

In a browser, log in to ValidMind.

In the left sidebar, navigate to Inventory and select the model you registered for this "ValidMind for model validation" series of notebooks.

Go to Getting Started and click Copy snippet to clipboard.

Next, load your model identifier credentials from an .env file or replace the placeholder with your own code snippet:
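Before calling vm.init(), it can help to confirm that the credentials were actually loaded. The sketch below is one way to do that with the standard library only. The variable names VM_API_HOST, VM_API_KEY, VM_API_SECRET and VM_API_MODEL are assumptions about what your .env file contains, and read_vm_credentials is a hypothetical helper, not part of the ValidMind Library.

```python
import os

# Assumed variable names; compare them with the snippet copied from the ValidMind Platform.
REQUIRED_VARS = ("VM_API_HOST", "VM_API_KEY", "VM_API_SECRET", "VM_API_MODEL")


def read_vm_credentials(env=None):
    """Return the credentials found in `env`, or raise if any are missing or empty."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {name: env[name] for name in REQUIRED_VARS}
```

Calling read_vm_credentials() right after `%dotenv .env` surfaces a missing value early, with a message naming the variable, rather than as a connection error later on.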

Load the sample dataset

Let's first import the public Bank Customer Churn Prediction dataset from Kaggle, which was used to develop the dummy champion model.

We'll use this dataset to review steps that should have been conducted during the initial development and documentation of the model to ensure that the model was built correctly. By independently performing steps taken by the model development team, we can confirm whether the model was built using appropriate and properly processed data.

In our below example, note that:

- The target column, Exited, has a value of 1 when a customer has churned and 0 otherwise.

- The ValidMind Library provides a wrapper to automatically load the dataset as a Pandas DataFrame object. A Pandas DataFrame is a two-dimensional tabular data structure that makes use of rows and columns.

from validmind.datasets.classification import customer_churn as demo_dataset

print(

f"Loaded demo dataset with: \n\n\t• Target column: '{demo_dataset.target_column}' \n\t• Class labels: {demo_dataset.class_labels}"

)

raw_df = demo_dataset.load_data()

raw_df.head()

Verifying data quality adjustments

Let's say that thanks to the documentation submitted by the model development team (Learn more ...), we know that the sample dataset was first modified before being used to train the champion model. After performing some data quality assessments on the raw dataset, it was determined that the dataset required rebalancing, and highly correlated features were also removed.

Identify qualitative tests

During model validation, we use the same data processing logic and training procedure to confirm that the model's results can be reproduced independently, so let's start by doing some data quality assessments by running a few individual tests just like the development team did.

Use the vm.tests.list_tests() function introduced by the first notebook in this series in combination with vm.tests.list_tags() and vm.tests.list_tasks() to find which prebuilt tests are relevant for data quality assessment:

- tasks represent the kind of modeling task associated with a test. Here we'll focus on classification tasks.

- tags are free-form descriptions providing more details about the test, for example, what category the test falls into. Here we'll focus on the data_quality tag.

# Get the list of available task types

sorted(vm.tests.list_tasks())

# Get the list of available tags

sorted(vm.tests.list_tags())

You can pass tags and tasks as parameters to the vm.tests.list_tests() function to filter the tests based on the tags and task types.

For example, to find tests related to tabular data quality for classification models, you can call list_tests() like this:

vm.tests.list_tests(task="classification", tags=["tabular_data", "data_quality"])

Refer to our notebook outlining the utilities available for viewing and understanding available ValidMind tests: Explore tests
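To build some intuition for filtering by task and tags, here is a small stand-alone sketch over a made-up catalogue. It assumes that a test matches when it supports the requested task and carries every requested tag. That matching rule, the catalogue entries and the filter_tests helper are all illustrative assumptions, so the actual behavior of list_tests() may differ.

```python
# Hypothetical catalogue entries; real test metadata comes from vm.tests.list_tests().
CATALOGUE = [
    {"id": "example.ClassImbalance",
     "tasks": {"classification"},
     "tags": {"tabular_data", "data_quality"}},
    {"id": "example.HighPearsonCorrelation",
     "tasks": {"classification", "regression"},
     "tags": {"tabular_data", "data_quality", "correlation"}},
    {"id": "example.ConfusionMatrix",
     "tasks": {"classification"},
     "tags": {"model_performance"}},
]


def filter_tests(catalogue, task=None, tags=None):
    """Return the IDs of entries that support `task` and carry all of `tags`."""
    wanted = set(tags or [])
    return [
        entry["id"]
        for entry in catalogue
        if (task is None or task in entry["tasks"]) and wanted <= entry["tags"]
    ]


print(filter_tests(CATALOGUE, task="classification", tags=["tabular_data", "data_quality"]))
```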

Initialize the ValidMind datasets

With the individual tests we want to run identified, the next step is to connect your data with a ValidMind Dataset object. This step is necessary whenever you want to connect a dataset to documentation and produce test results through ValidMind, but you only need to do it once per dataset.

Initialize a ValidMind dataset object using the init_dataset function from the ValidMind (vm) module. For this example, we'll pass in the following arguments:

- dataset: The raw dataset that you want to provide as input to tests.

- input_id: A unique identifier that allows tracking what inputs are used when running each individual test.

- target_column: A required argument if tests require access to true values. This is the name of the target column in the dataset.

# vm_raw_dataset is now a VMDataset object that you can pass to any ValidMind test

vm_raw_dataset = vm.init_dataset(

dataset=raw_df,

input_id="raw_dataset",

target_column="Exited",

)

Run data quality tests

Now that we know how to initialize a ValidMind dataset object, we're ready to run some tests!

You run individual tests by calling the run_test function provided by the validmind.tests module. For the examples below, we'll pass in the following arguments:

- test_id: The ID of the test to run, as seen in the ID column when you run list_tests.

- params: A dictionary of parameters for the test. These will override any default_params set in the test definition.
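The idea of params overriding default_params can be pictured as a dictionary merge in which user-supplied values win. The sketch below only illustrates that idea. The resolve_params helper and the default values shown are hypothetical, and this is not how the ValidMind Library is necessarily implemented.

```python
def resolve_params(default_params, params=None):
    """Merge user-supplied params over the defaults without mutating either dict."""
    return {**default_params, **(params or {})}


# Hypothetical defaults, chosen only for illustration.
defaults = {"min_percent_threshold": 10, "another_option": "keep-me"}

print(resolve_params(defaults, {"min_percent_threshold": 30}))
```

Keys that aren't overridden keep their default values, which is why you only need to pass the parameters you want to change.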

Run tabular data tests

The inputs expected by a test can also be found in the test definition — let's take validmind.data_validation.DescriptiveStatistics as an example.

Note that the output of the describe_test() function below shows that this test expects a dataset as input:

vm.tests.describe_test("validmind.data_validation.DescriptiveStatistics")

Now, let's run a few tests to assess the quality of the dataset:

result2 = vm.tests.run_test(

test_id="validmind.data_validation.ClassImbalance",

inputs={"dataset": vm_raw_dataset},

params={"min_percent_threshold": 30},

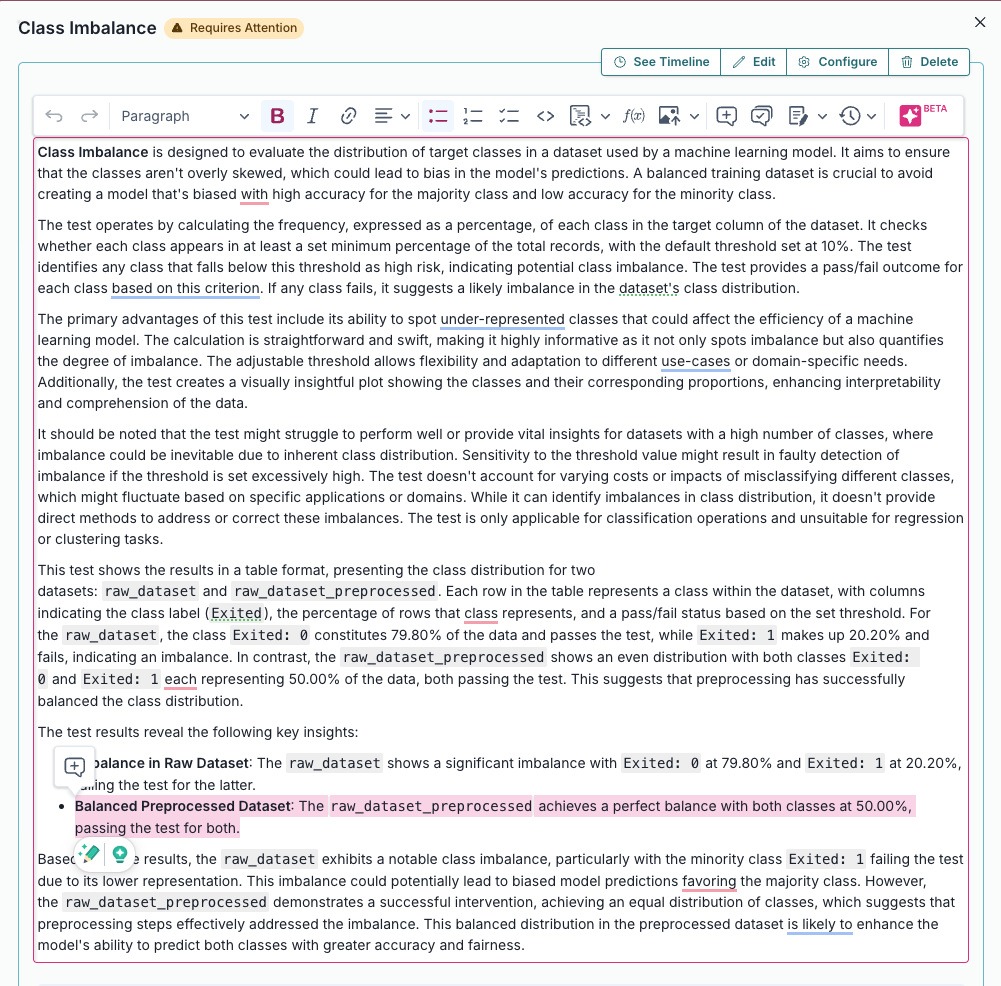

)The output above shows that the class imbalance test did not pass according to the value we set for min_percent_threshold — great, this matches what was reported by the model development team.
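To see roughly what a minimum-percentage check on class balance measures, here is a pandas-only sketch on toy data. It assumes the check passes only when every class makes up at least min_percent_threshold percent of the rows. The helper names and the toy data are hypothetical, and this is not ValidMind's implementation of ClassImbalance.

```python
import pandas as pd


def class_percentages(df, target_column):
    """Share of rows per class, expressed as percentages."""
    return df[target_column].value_counts(normalize=True).mul(100)


def passes_min_percent(df, target_column, min_percent_threshold):
    """True only if every class reaches the minimum percentage."""
    return bool((class_percentages(df, target_column) >= min_percent_threshold).all())


# Toy data: 80% of customers stayed (0) and 20% churned (1).
toy_df = pd.DataFrame({"Exited": [0] * 8 + [1] * 2})

print(class_percentages(toy_df, "Exited").to_dict())
print(passes_min_percent(toy_df, "Exited", min_percent_threshold=30))
```

With a threshold of 30, the minority class in the toy data falls short, so the check fails in the same way the raw dataset does in this notebook.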

To address this issue, we'll re-run the test on some processed data. In this case let's apply a very simple rebalancing technique to the dataset:

import pandas as pd

raw_copy_df = raw_df.sample(frac=1)  # Create a shuffled copy of the raw dataset

# Create a balanced dataset with the same number of exited and not exited customers

exited_df = raw_copy_df.loc[raw_copy_df["Exited"] == 1]

not_exited_df = raw_copy_df.loc[raw_copy_df["Exited"] == 0].sample(n=exited_df.shape[0])

balanced_raw_df = pd.concat([exited_df, not_exited_df])

balanced_raw_df = balanced_raw_df.sample(frac=1, random_state=42)

With this new balanced dataset, you can re-run the individual test to see if it now passes the class imbalance test requirement.

As this is technically a different dataset, remember to first initialize a new ValidMind Dataset object to pass in as input as required by run_test():

# Register new data and now 'balanced_raw_dataset' is the new dataset object of interest

vm_balanced_raw_dataset = vm.init_dataset(

dataset=balanced_raw_df,

input_id="balanced_raw_dataset",

target_column="Exited",

)

# Pass the initialized `balanced_raw_dataset` as input into the test run

result = vm.tests.run_test(

test_id="validmind.data_validation.ClassImbalance",

inputs={"dataset": vm_balanced_raw_dataset},

params={"min_percent_threshold": 30},

)

Documenting test results

Now that we've done some analysis on two different datasets, we can use ValidMind to easily document why certain things were done to our raw data with testing to support it. Every test result returned by the run_test() function has a .log() method that can be used to send the test results to the ValidMind Platform.

When logging validation test results to the platform, you'll need to manually add those results to the desired section of the validation report. To demonstrate how to add test results to your validation report, we'll log our data quality tests and insert the results via the ValidMind Platform.

Configure and run comparison tests

Below, we'll perform comparison tests between the original raw dataset (raw_dataset) and the final preprocessed (raw_dataset_preprocessed) dataset, again logging the results to the ValidMind Platform.

We can specify all the tests we'd like to run in a dictionary called test_config, and we'll pass in the following arguments for each test:

- params: Individual test parameters.

- input_grid: Individual test inputs to compare. In this case, we'll input our two datasets for comparison.
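One way to picture an input grid is as a set of input combinations, with the test run once per combination. The sketch below assumes the grid expands to the Cartesian product of its values. That expansion rule and the expand_input_grid helper are illustrative assumptions rather than a description of ValidMind's internals.

```python
from itertools import product


def expand_input_grid(input_grid):
    """Turn {"name": [v1, v2], ...} into one inputs dict per combination."""
    keys = list(input_grid)
    return [
        dict(zip(keys, combo))
        for combo in product(*(input_grid[key] for key in keys))
    ]


grid = {"dataset": ["raw_dataset", "raw_dataset_preprocessed"]}
for inputs in expand_input_grid(grid):
    print(inputs)
```

With a single key holding two dataset identifiers, this yields two sets of inputs, one per dataset, which is what a side-by-side comparison needs.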

Note here that the input_grid expects the input_id of the dataset as the value rather than the variable name we specified:

# Individual test config with inputs specified

test_config = {

"validmind.data_validation.ClassImbalance": {

"input_grid": {"dataset": ["raw_dataset", "raw_dataset_preprocessed"]},

"params": {"min_percent_threshold": 30}

},

"validmind.data_validation.HighPearsonCorrelation": {

"input_grid": {"dataset": ["raw_dataset", "raw_dataset_preprocessed"]},

"params": {"max_threshold": 0.3}

},

}

Then batch run and log our tests in test_config:

for t in test_config:
    print(t)
    try:
        # Check if test has input_grid
        if 'input_grid' in test_config[t]:
            # For tests with input_grid, pass the input_grid configuration
            if 'params' in test_config[t]:
                vm.tests.run_test(t, input_grid=test_config[t]['input_grid'], params=test_config[t]['params']).log()
            else:
                vm.tests.run_test(t, input_grid=test_config[t]['input_grid']).log()
        else:
            # Original logic for regular inputs
            if 'params' in test_config[t]:
                vm.tests.run_test(t, inputs=test_config[t]['inputs'], params=test_config[t]['params']).log()
            else:
                vm.tests.run_test(t, inputs=test_config[t]['inputs']).log()
    except Exception as e:
        print(f"Error running test {t}: {str(e)}")

That's expected, as when we run validation tests, the logged results need to be manually added to your report as part of your compliance assessment process within the ValidMind Platform.
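The HighPearsonCorrelation test configured above uses a max_threshold of 0.3. As a rough picture of what such a check looks for, the pandas-only sketch below lists column pairs whose absolute Pearson correlation exceeds a threshold, then drops one offending column. The helper name and toy data are hypothetical, the choice to drop Age mirrors what this notebook says about the preprocessed data, and none of this is ValidMind's own implementation.

```python
import pandas as pd


def high_correlation_pairs(df, max_threshold):
    """List (column_a, column_b, r) for numeric pairs with |r| above the threshold."""
    corr = df.select_dtypes("number").corr()  # Pearson correlation by default
    columns = list(corr.columns)
    pairs = []
    for i, first in enumerate(columns):
        for second in columns[i + 1:]:
            r = float(corr.loc[first, second])
            if abs(r) > max_threshold:
                pairs.append((first, second, round(r, 3)))
    return pairs


# Toy data in which Age tracks the target closely and Balance does not.
toy_df = pd.DataFrame({
    "Age": [25, 32, 41, 48, 55, 63],
    "Balance": [300, 100, 500, 400, 200, 300],
    "Exited": [0, 0, 0, 1, 1, 1],
})

print(high_correlation_pairs(toy_df, max_threshold=0.3))

# Removing the highly correlated column leaves no pair above the threshold.
toy_no_age_df = toy_df.drop(columns=["Age"])
print(high_correlation_pairs(toy_no_age_df, max_threshold=0.3))
```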

Log tests with unique identifiers

Next, we'll use the previously initialized vm_balanced_raw_dataset (that still has a highly correlated Age column) as input to run an individual test, then log the result to the ValidMind Platform.

When running individual tests, you can use a custom result_id to tag the individual result with a unique identifier:

- This result_id can be appended to test_id with a : separator.

- The balanced_raw_dataset result identifier will correspond to the balanced_raw_dataset input, the dataset that still has the Age column.

result = vm.tests.run_test(

test_id="validmind.data_validation.HighPearsonCorrelation:balanced_raw_dataset",

params={"max_threshold": 0.3},

inputs={"dataset": vm_balanced_raw_dataset},

)

result.log()

Add test results to reporting

With some test results logged, let's head to the model we connected to at the beginning of this notebook and learn how to insert a test result into our validation report (Need more help?).

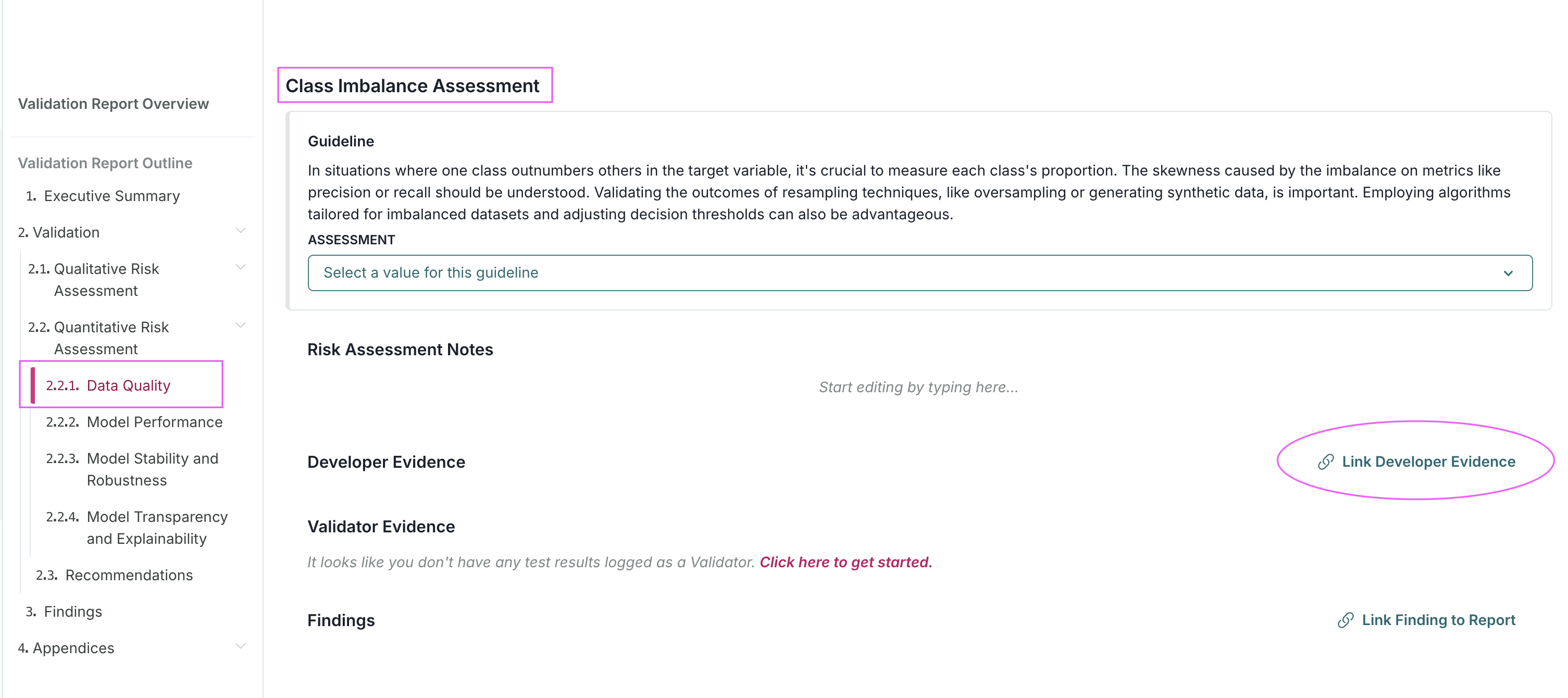

While the example below focuses on a specific test result, you can follow the same general procedure for your other results:

From the Inventory in the ValidMind Platform, go to the model you connected to earlier.

In the left sidebar that appears for your model, click Validation Report under Documents.

Locate the Data Preparation section and click on 2.2.1. Data Quality to expand that section.

Under the Class Imbalance Assessment section, locate Validator Evidence then click Link Evidence to Report:

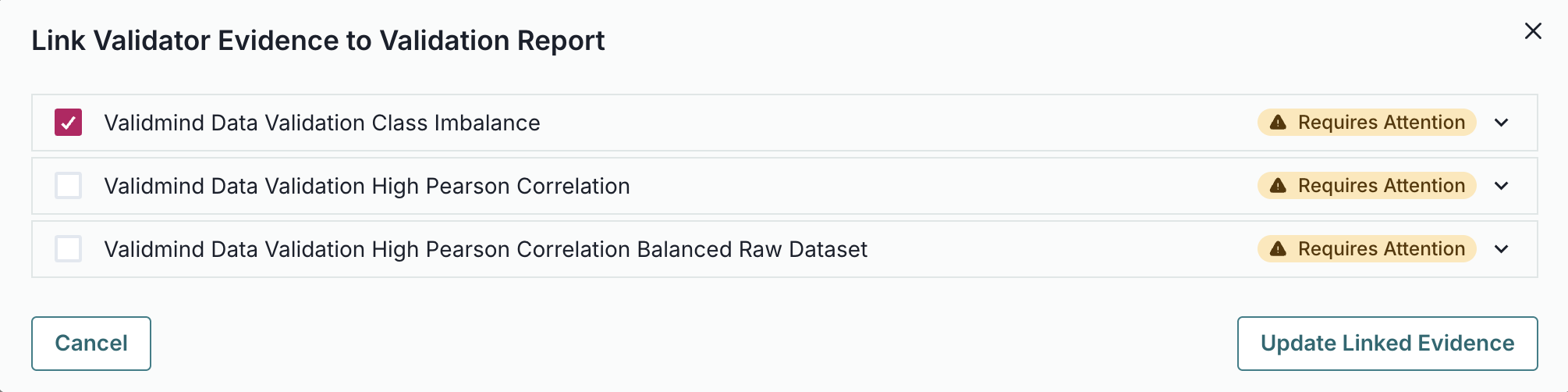

Select the Class Imbalance test results we logged: ValidMind Data Validation Class Imbalance

Click Update Linked Evidence to add the test results to the validation report.

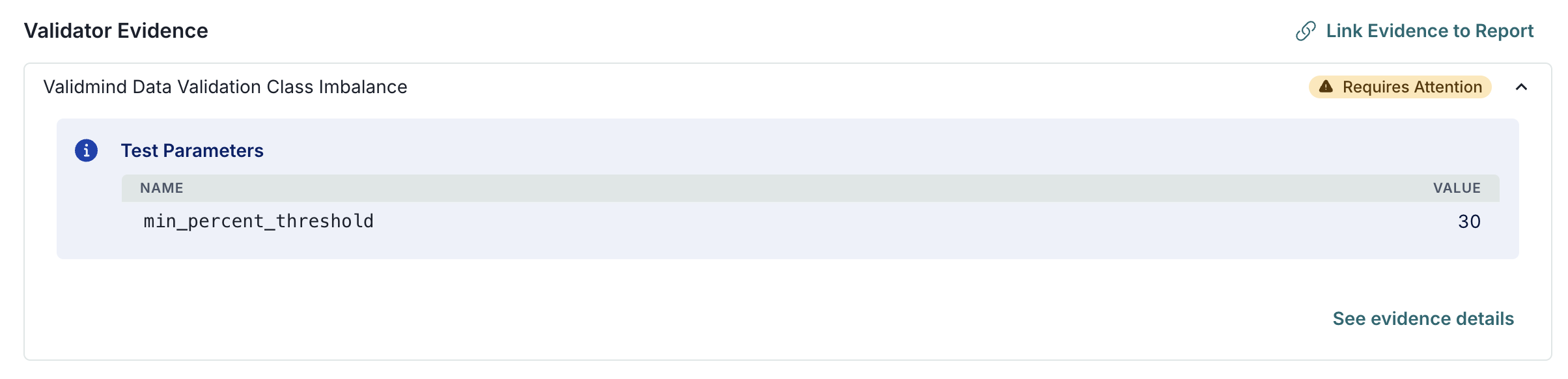

Confirm that the results for the Class Imbalance test have been correctly inserted into section 2.2.1. Data Quality of the report:

Note that these test results are flagged as Requires Attention because they include comparative results from our initial raw dataset.

Click See evidence details to review the LLM-generated description that summarizes the test results and confirms that our final preprocessed dataset actually passes our test:

Learn more: Work with content blocks

Split the preprocessed dataset

With our raw dataset rebalanced and highly correlated features removed, let's now split our dataset into training and test sets in preparation for model evaluation testing.

To start, let's grab the first few rows from the balanced_raw_no_age_df dataset we initialized earlier:

balanced_raw_no_age_df.head()

Before training the model, we need to encode the categorical features in the dataset:

- Use the get_dummies() function from pandas to one-hot encode the categorical features. The OneHotEncoder class from the sklearn.preprocessing module is an alternative.

- The categorical features in the dataset are Geography and Gender.
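To see on a small scale what one-hot encoding with drop_first=True produces, here is a sketch on toy data. The rows are made up for illustration; only the column names Geography, Gender and Exited come from this notebook.

```python
import pandas as pd

# Toy rows, for illustration only.
toy_df = pd.DataFrame({
    "Geography": ["France", "Germany", "Spain", "France"],
    "Gender": ["Female", "Male", "Male", "Female"],
    "Exited": [0, 1, 0, 1],
})

# drop_first=True omits the first category of each feature, which becomes the
# baseline implied when all of that feature's remaining indicators are zero.
encoded_df = pd.get_dummies(toy_df, columns=["Geography", "Gender"], drop_first=True)

print(list(encoded_df.columns))
```

Dropping the first level avoids carrying a redundant indicator column for each categorical feature, since its value can always be inferred from the others.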

balanced_raw_no_age_df = pd.get_dummies(

balanced_raw_no_age_df, columns=["Geography", "Gender"], drop_first=True

)

balanced_raw_no_age_df.head()

Splitting our dataset into training and testing is essential for proper validation testing, as this helps assess how well the model generalizes to unseen data:

- We start by dividing our balanced_raw_no_age_df dataset into training and test subsets using train_test_split, with 80% of the data allocated to training (train_df) and 20% to testing (test_df).

- From each subset, we separate the features (all columns except "Exited") into X_train and X_test, and the target column ("Exited") into y_train and y_test.
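The train_test_split call in this notebook doesn't fix a random seed, so the rows in each subset can change from run to run. If you need a repeatable split, one option is to pass random_state to train_test_split. The sketch below shows the same idea in plain pandas on toy data, assuming the DataFrame has a unique index. The split_train_test helper and the toy data are hypothetical.

```python
import pandas as pd


def split_train_test(df, test_size=0.20, seed=42):
    """Seeded split: sample the test rows, then keep the remaining rows for training."""
    test_df = df.sample(frac=test_size, random_state=seed)
    train_df = df.drop(index=test_df.index)  # relies on a unique index
    return train_df, test_df


toy_df = pd.DataFrame({"feature": range(10), "Exited": [0, 1] * 5})
train_df, test_df = split_train_test(toy_df)

# Separate the features from the target, as described above.
X_train, y_train = train_df.drop("Exited", axis=1), train_df["Exited"]
X_test, y_test = test_df.drop("Exited", axis=1), test_df["Exited"]

print(len(train_df), len(test_df))
```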

from sklearn.model_selection import train_test_split

train_df, test_df = train_test_split(balanced_raw_no_age_df, test_size=0.20)

X_train = train_df.drop("Exited", axis=1)

y_train = train_df["Exited"]

X_test = test_df.drop("Exited", axis=1)

y_test = test_df["Exited"]

Initialize the split datasets

Next, let's initialize the training and testing datasets so they are available for use:

vm_train_ds = vm.init_dataset(

input_id="train_dataset_final",

dataset=train_df,

target_column="Exited",

)

vm_test_ds = vm.init_dataset(

input_id="test_dataset_final",

dataset=test_df,

target_column="Exited",

)

In summary

In this second notebook, you learned how to:

Next steps

Develop potential challenger models

Now that you're familiar with the basics of using the ValidMind Library, let's use it to develop a challenger model: 3 — Developing a potential challenger model

Copyright © 2023-2026 ValidMind Inc. All rights reserved.

Refer to LICENSE for details.

SPDX-License-Identifier: AGPL-3.0 AND ValidMind Commercial