Set thresholds and alerts

When logging a metric, you can define thresholds or use the passed parameter to flag whether the metric meets performance criteria. If a metric breaches a threshold, stakeholders receive email alert notifications.

These thresholds and alerts apply to metrics over time blocks, helping you track model performance and identify issues more easily. Thresholds help you flag values that suggest drift, underperformance, or other types of risk, for example by signaling low, medium, or high risk based on how a metric evolves over time.

Together, thresholds and notifications improve your visibility into model performance and compliance risk, enabling timely intervention when needed.

Prerequisites

Use a custom function

To programmatically evaluate whether a metric passes specific criteria, use a custom function:

gini = 0.75

thresholds = {

"high_risk": 0.5,

"medium_risk": 0.6,

"low_risk": 0.8,

}

def passed_fn(value):

return value >= thresholds["low_risk"]

log_metric(

key="GINI Score",

value=gini,

recorded_at=datetime(2025, 6, 10),

thresholds=thresholds,

passed=passed_fn(gini)

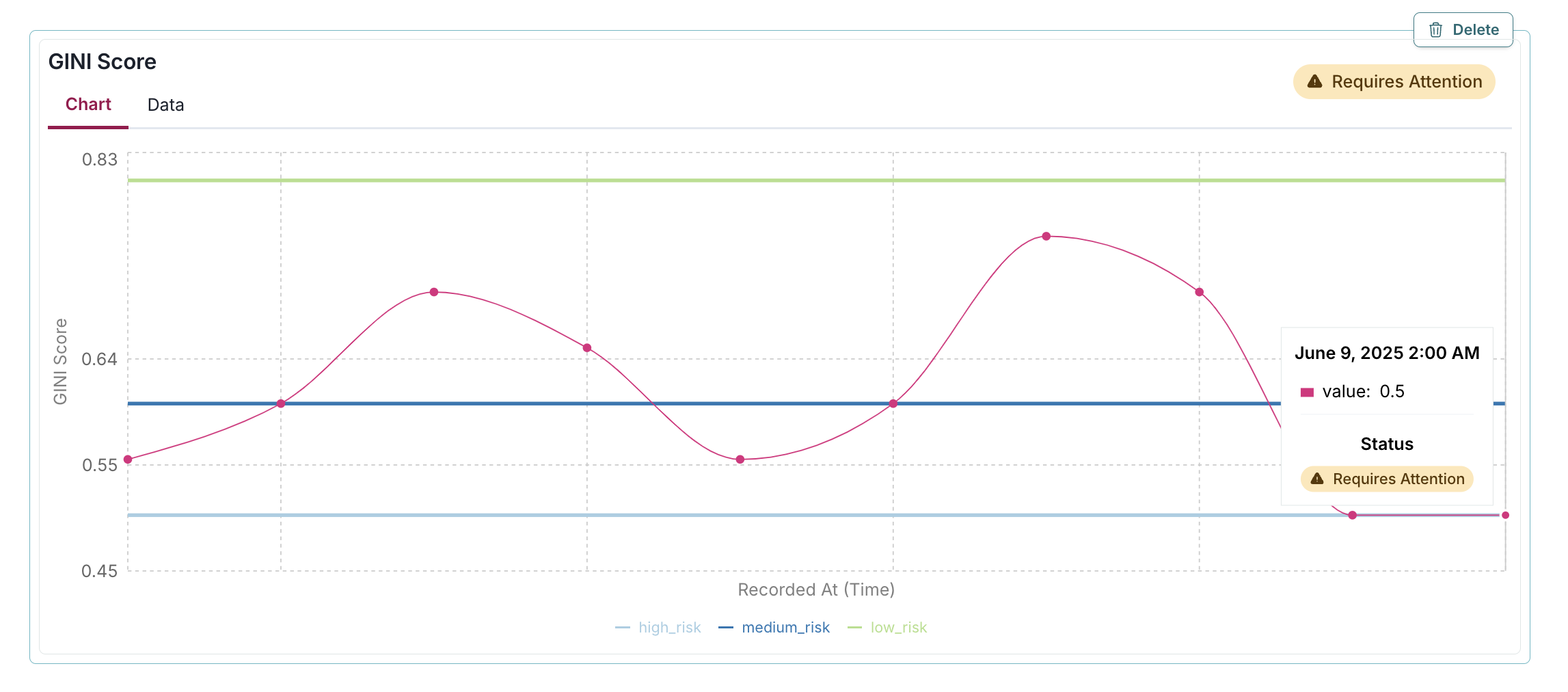

)In this example:

- Three risk thresholds are defined: high risk (0.5), medium risk (0.6), and low risk (0.8).

- The custom function evaluates if the value meets or exceeds the low risk threshold.

- The GINI value of 0.75 falls between the medium risk and low risk thresholds.

- Since 0.75 is below 0.8, the function returns False, and passed=False.

- Although the passed flag is False, the metric is still classified as medium risk for threshold-based visualizations.

- This results in a Requires Attention status badge.

- The threshold classifications and the passed parameter work independently: one determines the risk level visualization and the other determines the status badge.
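The independence of the two mechanisms can be sketched in plain Python. The helper classify_risk below is hypothetical and not part of the ValidMind API. It assumes a higher-is-better metric, that a value at or above the low risk threshold is low risk, and that anything below the medium risk threshold is treated as high risk. The platform performs the actual classification, so treat this only as an illustration of the reasoning.

```python
# Illustrative only: classify_risk is a hypothetical helper, not a ValidMind function.
# Assumes higher values are better, as in the GINI example.

thresholds = {"high_risk": 0.5, "medium_risk": 0.6, "low_risk": 0.8}


def classify_risk(value, thresholds):
    """Return the risk band a value falls into (assumed semantics)."""
    if value >= thresholds["low_risk"]:
        return "low"
    if value >= thresholds["medium_risk"]:
        return "medium"
    return "high"  # assumption: everything below medium_risk is high risk


def passed_fn(value):
    """Same rule as the example: pass only at or above the low risk threshold."""
    return value >= thresholds["low_risk"]


gini = 0.75
risk_level = classify_risk(gini, thresholds)  # drives the risk visualization
passed = passed_fn(gini)                      # drives the status badge
print(risk_level, passed)  # medium False
```

The two results come from separate rules, so changing passed_fn would change the badge without changing the risk band.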

Set the passed parameter

To flag whether a metric value meets performance criteria, set the passed value explicitly:

log_metric(
    key="Test Coverage",
    value=0.85,
    recorded_at=datetime.now(),
    thresholds={"medium_risk": 0.9},
    passed=True,
)

In this example:

- The metric value (0.85) is below the single medium risk threshold (0.9), but the status badge is controlled by the passed flag rather than by the threshold.

- Setting passed=True displays a Satisfactory badge.

- Alternatively, to flag a metric with a Requires Attention badge, set passed=False.

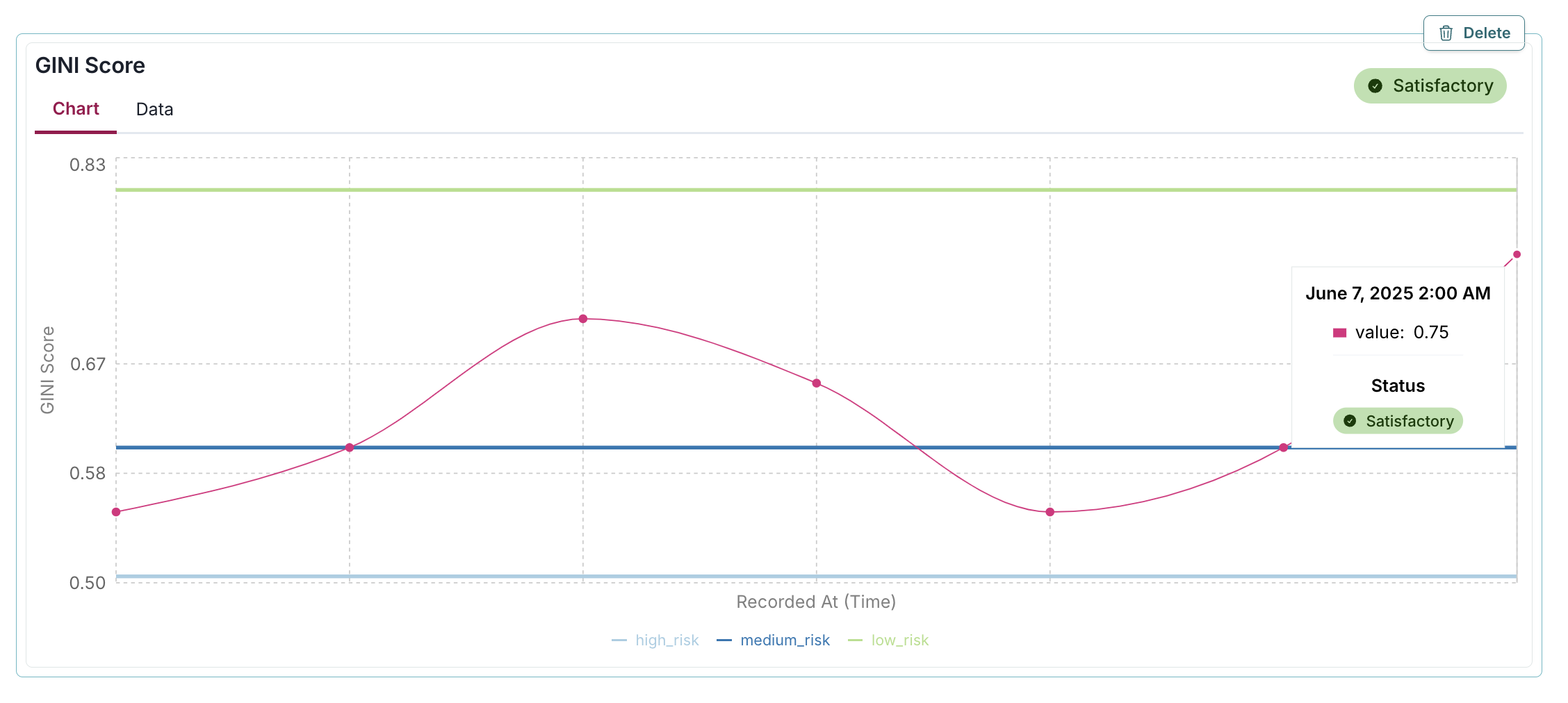

Output examples

These examples visualize GINI scores, which are commonly used to evaluate classification performance, particularly in credit risk and binary classification problems.

Satisfactory

Requires Attention

Alert notifications

The passed boolean is determined by you, either through a custom function, such as passed_fn in the earlier example, or by assigning a value directly. This approach allows you to interpret the configured thresholds according to your own logic, rather than relying on automatic evaluation.
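As a rough sketch of applying your own logic, a small factory can build pass/fail rules from the same thresholds dictionary. The name make_passed_fn is hypothetical and not part of the ValidMind API, and the rule assumes a higher-is-better metric.

```python
# Illustrative only: make_passed_fn is a hypothetical helper, not a ValidMind function.

def make_passed_fn(thresholds, level="low_risk"):
    """Build a pass/fail rule that compares a value against one chosen threshold."""
    cutoff = thresholds[level]

    def passed_fn(value):
        return value >= cutoff  # assumes a higher-is-better metric

    return passed_fn


thresholds = {"high_risk": 0.5, "medium_risk": 0.6, "low_risk": 0.8}

strict = make_passed_fn(thresholds)                  # pass only at or above 0.8
lenient = make_passed_fn(thresholds, "medium_risk")  # pass at or above 0.6

print(strict(0.75), lenient(0.75))  # False True
```

Either result could then be supplied as the passed argument when logging the metric, depending on which interpretation fits your monitoring plan.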

A metric is considered to have breached a threshold when its value falls within the high or medium risk range, that is, when it is below the low risk threshold (in this example, below 0.8). Breaching a threshold affects visual risk indicators and triggers alert notifications, independently of whether the passed flag is True or False.
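The breach rule described above can be written as a one-line check. This is a minimal sketch: is_breached is a hypothetical helper, not a ValidMind function, and it simply restates the rule that a value below the low risk threshold counts as a breach.

```python
# Illustrative only: is_breached is a hypothetical helper, not a ValidMind function.

def is_breached(value, thresholds):
    """A value breaches when it is below the low risk threshold."""
    return value < thresholds["low_risk"]


thresholds = {"high_risk": 0.5, "medium_risk": 0.6, "low_risk": 0.8}

# The passed flag is chosen by you, so it can disagree with the breach status.
for value, passed in [(0.75, False), (0.75, True), (0.85, True)]:
    breached = is_breached(value, thresholds)
    print(f"value={value} passed={passed} breached={breached}")
```

In the second case the metric is flagged as passed yet still counts as a breach, which is the situation where an alert would be sent despite a passing flag.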

An email is sent to model stakeholders notifying them that the model has a metric that did not pass an ongoing monitoring threshold and requires attention.

Stakeholders who receive email alert notifications include:

- The model owners

- The model developers

- The validators

Responding to these notifications involves prioritizing the alerts and taking appropriate action, ideally as part of your documented ongoing monitoring plan.

![Automated email alert from ValidMind notifying that the GINI Score for the [Test] Customer Churn model is 0.75, which falls between the medium (0.6) and low (0.8) risk thresholds, with a button to view the model.](../../guide/monitoring/automated-alert-notification-email.png)